Training at VSC - Simeon Harrison

Supercomputing and AI for Everyone: Training at the Vienna Scientific Cluster

High-Performance Computing and Artificial Intelligence offer huge opportunities for businesses large and small. But how do you get started – and what do you do afterwards? Thanks to the EuroCC project, it is now particularly easy and cost-effective to receive training in supercomputing and AI, says Simeon Harrison, a trainer at Austria's supercomputer, the Vienna Scientific Cluster.

Interview by Bettina Benesch

Simeon, you offer various trainings and webinars at the Vienna Scientific Cluster (VSC). Who is your target audience?

We are here for anyone who wants to approach the topic of high-performance computing (HPC) and artificial intelligence (AI) or deepen their knowledge – regardless of their level. We offer training for beginners and advanced users and also have offerings in the intermediate range, which we will continue to expand.

Do you plan to expand the programme thematically as well?

New courses on deep learning are in the works. We are also currently establishing a cooperation with Poland, Slovakia, Czechia, and Slovenia to bring in more experts. Generally, we’ll focus more on AI, and the training programme will expand in both breadth and depth.

Where do the trainers for your courses come from?

Claudia Blaas-Schenner established the training centre at the Vienna Scientific Cluster in 2015 and has been the head of the training programme ever since. She has been involved in HPC for 30 years and is also responsible for all the courses that run at the EuroCC Austria competence centre. Claudia is an expert in the Message Passing Interface (MPI), a standard for message exchange in parallel computing on distributed systems, and conducts all courses related to it.

“We offer training for anyone who wants to learn supercomputing and artificial intelligence – regardless of their level.”

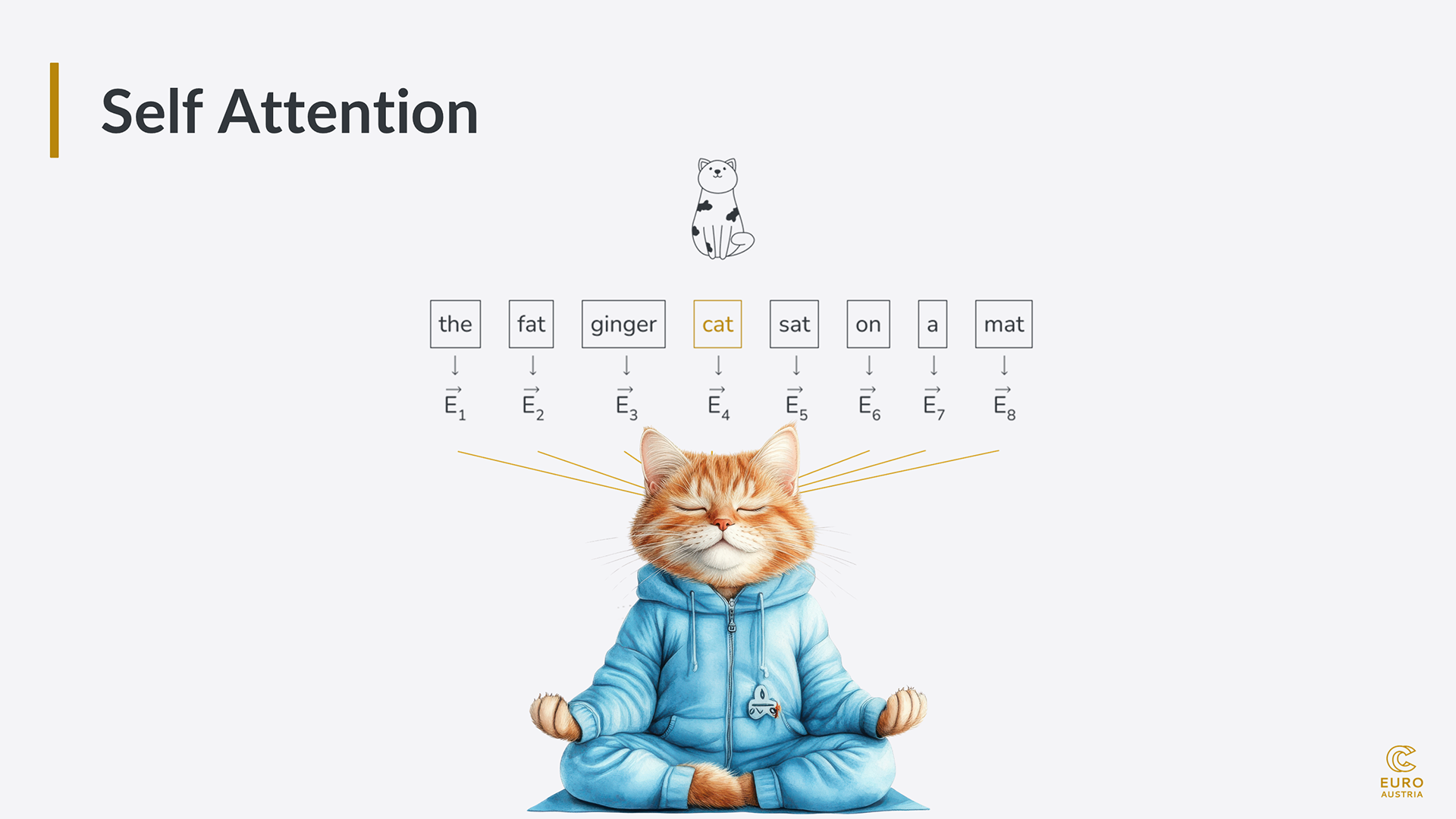

I support her and also lead my own courses, such as the beginner course Linux Command Line and the intermediate courses Python for HPC, Introduction to Deep Learning, and Large Language Models on Supercomputers. Other colleagues in the team conduct trainings on GPU or C++ programming. In the introductory course for all new VSC users, we also bring in other trainers to offer more variety in topics and to address very specific questions.

What do your courses cost?

The courses funded by EuroCC are free for people working at universities or companies based in the EU and in countries associated with the Horizon 2020 programme. There are other courses at the VSC that are not part of the EuroCC project, such as the C++ course. For these, fees apply to attendees from the business sector, but they are very moderate.

Where does Austria stand in terms of HPC training?

Believe it or not, Austria is actually at the forefront of HPC education. We are one of the major training centres in Europe.

When you think of researchers or companies getting into HPC, what are the biggest challenges they face?

The first major hurdle for people who haven't worked with HPC before is that the machines are Linux-based, so you first have to get familiar with Linux. To help overcome this challenge, we offer a Linux course for all users of our supercomputer. Once that initial hurdle is cleared, the rest is relatively easy, and HPC is a very efficient and fast way to achieve your goals.

What are your tips for people just starting or wanting to start with HPC?

Anyone who knows the basics of the Linux command line is already on the right track. Another tip from me: Get to really know your code. Pay attention to where the bottlenecks are and which sections of code take how much time.

It’s also important to familiarise yourself with benchmarking. This involves gathering metrics: Which sections of code take how much time, where are the bottlenecks, what takes an especially long time, and which hardware resources do I need?
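
As an illustration of gathering such metrics, here is a minimal Python sketch that times individual sections of a program with the standard library. The helper `timed`, the dictionary `timings`, and the two workload functions are hypothetical names chosen for this example; they are not part of any VSC course material or tool.

```python
import time
from contextlib import contextmanager

# Hypothetical store: section name -> accumulated wall-clock seconds
timings = {}

@contextmanager
def timed(section):
    """Add the wall-clock time spent inside the with-block to `timings`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        timings[section] = timings.get(section, 0.0) + elapsed

def load_data(n):
    """Placeholder for an input stage."""
    return list(range(n))

def compute(data):
    """Placeholder for the numerical core of a program."""
    return sum(x * x for x in data)

with timed("load"):
    data = load_data(200_000)
with timed("compute"):
    result = compute(data)

# List the sections from slowest to fastest to spot the bottleneck
for section, seconds in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{section:10s} {seconds:.4f} s")
```

Timing a handful of coarse sections like this is usually enough for a first impression; a dedicated profiler can then be pointed at the slowest one.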

By working on this, you start to get an idea of what can be achieved with HPC and what is feasible. This also helps to develop a sense of how many resources are required on a supercomputer. If you request too little memory or too little time, you risk the infamous OOM (out-of-memory) error or the equally common job timeout. In that case, it’s back to the start.
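
One way to get a feel for memory needs before submitting a job is to measure the peak on a small test run. The sketch below uses Python's standard `tracemalloc` module, which only tracks memory allocated through Python itself. The workload `build_table` and the 50 % safety margin are assumptions made for this example, not a VSC recommendation.

```python
import tracemalloc

def build_table(n):
    """Placeholder workload: allocates a list of n floats."""
    return [float(i) for i in range(n)]

tracemalloc.start()
table = build_table(100_000)
# get_traced_memory() returns (current, peak) in bytes since start()
current, peak = tracemalloc.get_traced_memory()
tracemalloc.stop()

peak_mib = peak / 2**20

# Assumed safety margin of 50 % on top of the measured peak
requested_mib = peak_mib * 1.5

print(f"measured peak: {peak_mib:.1f} MiB")
print(f"memory to request (with margin): {requested_mib:.1f} MiB")
```

Repeating the measurement for a few input sizes shows how memory grows with the problem size, which makes it easier to extrapolate to the full run.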

How do you keep your knowledge up to date?

I'm quite old-school in that regard: I love books. I do use Google and various forums as well, but I actually prefer to get my fundamental understanding from books. However, since the field develops so quickly, printed books or even e-books often can't keep up. That's when it helps to explore various platforms. Hugging Face is currently huge in the AI field; Nvidia, the hardware manufacturer, offers a lot; and there are other resources like TensorFlow Hub or PyTorch Hub.

I also frequently find publications and corresponding code on Papers with Code. Articles and explanations on various topics are published on Towards Data Science, and I’m subscribed to a few well-known names on YouTube. All of this together keeps me reasonably up to date.

I need to emphasise, though, that it's not enough to read 100 books or memorise all the content on Hugging Face. You have to try out what you've learned yourself so that you learn by failing. My motto is: “If in doubt, code it out!” In other words, practice is more important than theory. Of course, it helps to work on a specific problem. For example, I am involved in various customer projects at EuroCC Austria, through which I also gain a lot of practical experience.

Was there anything that surprised you when you first started working with HPC?

When I started working at EuroCC Austria and saw the supercomputer for the first time, I was a bit underwhelmed. The newer the machine, the smaller it is. I imagined a huge hall with hundreds of racks where you can hardly see the other end. I think the average closet space in a villa in Hietzing is larger than a supercomputer. What then further surprised me was how complex the cooling of these devices is. That really takes up a lot of space.

What fascinates you personally about HPC?

What fascinates me the most is that we are witnessing an industrial revolution. A lot is likely to happen in a very short time; probably more than in any of the previous industrial revolutions. I find it exciting and inspiring to be part of this process and right at the forefront – even if you only play the role of a tiny cog.

What also fascinates me is that you come into contact with so many different scientific disciplines, whether it’s materials science, engineering, or the humanities. I like that I get to experience a bit of everything.

Short bio

Since 2021, Simeon Harrison has been working at the national competence centre EuroCC Austria to spread knowledge about High-Performance Computing, Big Data and Artificial Intelligence in Austria and Europe. Sharing knowledge has always been Simeon's passion: before he dealt with supercomputers, he taught high school students everything about polynomials, asymptotes, and integral functions. At EuroCC, he specialises in the booming field of deep learning/machine learning.

Training at the Vienna Scientific Cluster (VSC)

Austria’s flagship supercomputer, the VSC, was put into operation in 2009. The first training events were held in 2015 and were fully booked immediately. Today, the VSC offers around 35 different courses annually, from the VSC intro course to MPI and C++ to Python for HPC and large language models. About 1,200 people attend the events each year.

The courses are free for all VSC users and employees of Austrian universities, and for anyone in Europe as long as the course is co-financed by EuroCC. Only for courses not covered by EuroCC do participants from industry and non-Austrian participants pay a small course fee.

The VSC training programme: events.vsc.ac.at

About the key concepts

Believe it or not, High-Performance Computing (HPC) is actually a relatively old concept: the word "supercomputing" was first used in 1929, and the first mainframe computers appeared in the 1950s. However, they had far less capacity than today's mobile phones. The technology really took off in the 1970s.

HPC systems are used whenever a personal computer's memory is too small, when simulations are too large to run on a personal system, or when something that was previously calculated locally now needs to be calculated much more frequently.

The performance of supercomputers is measured in FLOPS (Floating Point Operations Per Second). In 1997, a supercomputer achieved 1.06 TeraFLOPS (1 TeraFLOPS = 10^12 FLOPS) for the first time; Austria's currently most powerful supercomputer, the VSC-5, reaches 2.31 PetaFLOPS or 2,310 TeraFLOPS (1 PetaFLOPS = 10^15 FLOPS). The era of exascale computers began in 2022, with performance measured in ExaFLOPS (1 ExaFLOPS = 10^18 FLOPS). An ExaFLOPS equals one quintillion floating-point operations per second.
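
To make these orders of magnitude concrete, the following short Python sketch redoes the unit conversions with the figures quoted above. The variable names are chosen for this example only, and integers are used so that the conversions stay exact.

```python
# Unit prefixes as defined in the text
TERA = 10**12
PETA = 10**15
EXA = 10**18

# Figures quoted in the text, expressed in FLOPS
first_teraflops_system = 1_060 * 10**9   # 1.06 TeraFLOPS (1997)
vsc5 = 2_310 * TERA                      # 2.31 PetaFLOPS (VSC-5)

# 2.31 PetaFLOPS expressed in TeraFLOPS
vsc5_in_teraflops = vsc5 // TERA         # 2310

# How many times faster VSC-5 is than the 1997 system (about 2,179)
speedup = vsc5 / first_teraflops_system

# How many VSC-5 machines add up to one ExaFLOPS (about 433)
vsc5_per_exaflops = EXA / vsc5

print(f"VSC-5: {vsc5_in_teraflops} TeraFLOPS")
print(f"VSC-5 vs. 1997 system: about {speedup:.0f}x")
print(f"VSC-5 systems per ExaFLOPS: about {vsc5_per_exaflops:.0f}")
```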

As of June 2024, there were only two exascale systems in the TOP500 list of the world's best supercomputers: Frontier at Oak Ridge National Laboratory and Aurora at Argonne National Laboratory, both in the USA. In Europe, there are currently three pre-exascale computers, which are precursors to exascale systems. Two European exascale systems will be operational shortly.

VSC (Vienna Scientific Cluster) is Austria's supercomputer, co-financed by several Austrian universities. The computers are located at TU Wien in Vienna. From 2025, the newest supercomputer, MUSICA (Multi-Site Computer Austria), will be in use at locations in Vienna, Linz, and Innsbruck.

Researchers from the participating universities can use the VSC for their simulations, and under the EuroCC programme, companies also have easy and free access to computing time on Austria's supercomputer. Additionally, the VSC team is an important source of know-how: in numerous workshops, future HPC users, regardless of their level, learn everything about supercomputing, AI and big data.

EuroHPC Joint Undertaking is a public-private partnership of the European Union aimed at building a Europe-wide high-performance computing infrastructure and keeping it internationally competitive.

EuroCC is an initiative of EuroHPC.

Each participating country (EU plus some associated states) has established a national competence centre for supercomputing, big data and artificial intelligence – EuroCC Austria is one of them. The centres are part of the EuroCC project, which brings the technology closer to future users and facilitates access to supercomputers. The goal of the project is to help industry, academia and the private sector adopt and leverage HPC, AI and high-performance data analytics. EuroHPC also supports the EUMaster4HPC project, an educational programme for future HPC experts.