Preparing data for ML

Preparing your data for Machine Learning – a look inside the process

Most of the time spent developing a machine learning model actually goes into data preparation.

Preparing data is a critical step in the machine learning process: it maximises the chances of building accurate, robust and generalisable models that can make informed predictions and decisions.

In the course Data Analysis and Data Preparation for Machine Learning we will look into the proven methods of pre-processing data and introduce state-of-the-art tools and libraries to tackle various problems.

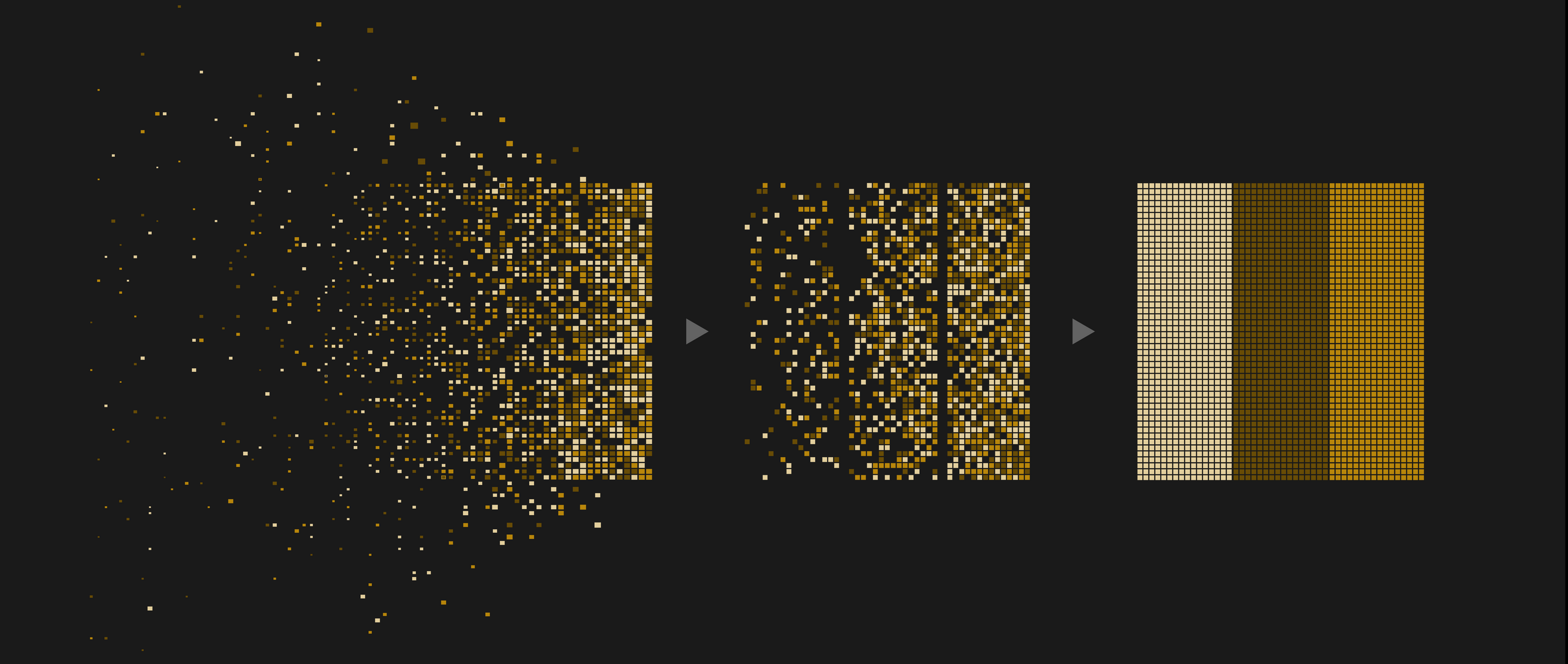

What does the process of preparing the data look like?

Let’s say you would like to train a machine learning (ML) model to diagnose a disease from medical laboratory parameters.

You’ve collected vast amounts of patient data. Now you want to make sure your data is consistent and complete.

1. Import your data into a Pandas dataframe to conduct a preliminary analysis. Here you remove duplicate entries, replace missing values with the column mean and check for outliers.
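
As a rough illustration of this first step, the sketch below cleans a tiny, made-up table of lab values. The column names (`patient_id`, `glucose`, `crp`) and the numbers are invented for the example, and the 1.5 × IQR rule is just one common convention for flagging outliers, not the only option.

```python
import numpy as np
import pandas as pd

# Hypothetical lab data; column names and values are made up for illustration.
df = pd.DataFrame({
    "patient_id": [1, 2, 2, 3, 4, 5],
    "glucose": [5.1, 6.3, 6.3, np.nan, 5.8, 25.0],
    "crp": [1.2, 3.4, 3.4, 2.1, np.nan, 2.8],
})

# Remove exact duplicate rows (patient 2 appears twice).
df = df.drop_duplicates().reset_index(drop=True)

# Replace missing values with the mean of the respective column.
lab_cols = ["glucose", "crp"]
df[lab_cols] = df[lab_cols].fillna(df[lab_cols].mean())

# Flag outliers with the 1.5 * IQR rule (an assumed convention).
q1 = df[lab_cols].quantile(0.25)
q3 = df[lab_cols].quantile(0.75)
iqr = q3 - q1
is_outlier = (df[lab_cols] < q1 - 1.5 * iqr) | (df[lab_cols] > q3 + 1.5 * iqr)

# Number of flagged values per column.
print(is_outlier.sum())
```

Whether a flagged value is a measurement error or a genuinely sick patient is a judgement call, so it is usually worth inspecting outliers before dropping them.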

Since you have a lot of parameters to work with, you are not sure if all of them are actually important for your ML model.

2. Run a correlation analysis in Pandas.
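
A minimal sketch of such a correlation analysis, assuming synthetic data: the columns `glucose`, `hba1c`, `sodium` and the `disease` label are invented here, and `hba1c` is deliberately constructed to correlate with `glucose` so that the effect is visible.

```python
import numpy as np
import pandas as pd

# Synthetic example data (assumption: not real patient data).
rng = np.random.default_rng(0)
n = 200
glucose = rng.normal(5.5, 1.0, n)
df = pd.DataFrame({
    "glucose": glucose,
    "hba1c": 0.8 * glucose + rng.normal(0, 0.3, n),  # built to track glucose
    "sodium": rng.normal(140, 3, n),                  # independent of the rest
})
# Toy label derived from glucose, purely for illustration.
df["disease"] = (df["glucose"] > 6.0).astype(int)

# Pairwise Pearson correlation between all numeric columns.
corr = df.corr()

# Rank the parameters by their correlation with the label.
print(corr["disease"].sort_values(ascending=False))
```

`DataFrame.corr` uses the Pearson coefficient by default; passing `method="spearman"` is an alternative when the relationships are monotonic but not linear.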

The result is overwhelming?

3. Let’s go for a heat-map created in Matplotlib to visualise the outcome.
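
One possible way to draw such a heat-map with plain Matplotlib is sketched below. The data is random and the column names are placeholders; the colour map and the output file name `correlation_heatmap.png` are arbitrary choices for this example.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Random placeholder data standing in for the laboratory parameters.
rng = np.random.default_rng(0)
df = pd.DataFrame(
    rng.normal(size=(100, 4)),
    columns=["glucose", "hba1c", "sodium", "crp"],
)
corr = df.corr()

fig, ax = plt.subplots(figsize=(5, 4))

# Show the correlation matrix as a colour-coded image, fixed to [-1, 1].
im = ax.imshow(corr.to_numpy(), cmap="coolwarm", vmin=-1, vmax=1)

# Label both axes with the parameter names.
ax.set_xticks(range(len(corr.columns)))
ax.set_xticklabels(corr.columns, rotation=45, ha="right")
ax.set_yticks(range(len(corr.index)))
ax.set_yticklabels(corr.index)

fig.colorbar(im, ax=ax, label="Pearson correlation")
fig.tight_layout()
fig.savefig("correlation_heatmap.png")
```

Fixing the colour scale to the range from -1 to 1 keeps the colours comparable between different datasets.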

Now you can see which features are important for your model and which are not. Choosing the features to keep is called feature selection, a part of feature engineering, and is a very important step in machine learning.

After you’ve dropped the unnecessary information, you need to turn all your data into numeric values. Some of the information, such as the sex of the patients or whether they are smokers, is non-numeric.

4. Pandas can easily one-hot encode these entries, so you can actually use them to train your model.
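
For example, one-hot encoding with `pandas.get_dummies` could look roughly like this. The three patients and the column names are made up for the illustration.

```python
import pandas as pd

# Hypothetical patient table with two non-numeric columns.
df = pd.DataFrame({
    "age": [34, 58, 45],
    "sex": ["female", "male", "female"],
    "smoker": ["no", "yes", "no"],
})

# Each category becomes its own 0/1 column; numeric columns are left as they are.
encoded = pd.get_dummies(df, columns=["sex", "smoker"], dtype=int)

print(encoded)
```

For two-valued columns such as `smoker`, passing `drop_first=True` would keep a single 0/1 column instead of two redundant ones.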

5. Next, you convert your dataframe to a NumPy array, normalise all data and split it into a training, a validation and a test set.
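
The following sketch shows one way this step might look in NumPy. The data is synthetic, and the 70/15/15 split between training, validation (evaluation) and test set is an assumed ratio. Note one deliberate deviation from the order described above: the sketch splits first and computes the normalisation statistics on the training set only, a common precaution so that no information from the other two sets leaks into training.

```python
import numpy as np
import pandas as pd

# Synthetic, already numeric data (assumption: stands in for the cleaned table).
rng = np.random.default_rng(42)
df = pd.DataFrame({
    "glucose": rng.normal(5.5, 1.0, 100),
    "crp": rng.normal(3.0, 1.5, 100),
    "disease": rng.integers(0, 2, 100),
})

# Separate features and label and convert them to NumPy arrays.
X = df[["glucose", "crp"]].to_numpy(dtype=float)
y = df["disease"].to_numpy()

# Shuffle the row indices and split them 70/15/15 (assumed ratio).
idx = rng.permutation(len(X))
n_train = len(X) * 70 // 100
n_val = len(X) * 15 // 100
train_idx = idx[:n_train]
val_idx = idx[n_train:n_train + n_val]
test_idx = idx[n_train + n_val:]

# Standardise with the mean and standard deviation of the training set only.
mean = X[train_idx].mean(axis=0)
std = X[train_idx].std(axis=0)
X_train = (X[train_idx] - mean) / std
X_val = (X[val_idx] - mean) / std
X_test = (X[test_idx] - mean) / std
y_train, y_val, y_test = y[train_idx], y[val_idx], y[test_idx]

print(X_train.shape, X_val.shape, X_test.shape)
```

Standardising to zero mean and unit variance is used here; min-max scaling to the range from 0 to 1 is an equally common choice.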

Finally, you are ready to build a machine learning model.

However, your project supervisor has just come up with some more patient data from a collaborating hospital.

As you go through all the above steps again, you realise that your data no longer fits in memory!

But do not despair: The wonderful Python library called Dask is made for exactly this problem.

6. In Dask your data is divided into smaller, more manageable chunks, which are then processed in parallel. This makes it possible to handle data that is larger than memory.

That's it!

These are the steps you will most likely need to take to get your data into a suitable shape before feeding it into ML algorithms further down the line. Get straight down to preparing your data with us on 6 September 2023!

The participants of the course will learn about pre-processing of data, the typical challenges and how to overcome them, and will get to know powerful Python libraries such as Pandas, NumPy, Matplotlib and Dask.